Maintaining a Compliance Assurance Program

A well-designed and well-executed compliance assurance program provides an essential tool for improving and verifying business performance and limiting compliance risks. Ultimately, however, a compliance program’s effectiveness comes down to whether it is merely a “paper program” or whether it is being integrated into the organization and used in practice on a daily basis.

The following can show evidence of a living, breathing program:

- Comprehensiveness of the program

- Dedicated staff and resources

- Employee knowledge and engagement

- Management commitment and employee perception

- Internal operational inspections and management “walkabouts”

- Independent internal audits, plus third-party audits

- Program tailoring to greatest risks

- Consistency and timeliness of exception (noncompliance/nonconformance) disclosures

- Tracking of timely and adequate corrective/preventive action completion

- Progress and performance monitoring

Best Practices

To achieve a compliance assurance program on par with world-class organizations, there are a number of best practices that companies should employ:

Know the requirements. This means maintaining an inventory of regulatory compliance requirements for each compliance program, as well as of state/local/contractual binding agreements applying to operations. It is vital that the organization keep abreast of current/upcoming requirements (federal, state, local).

Plan and develop the processes to comply. Identify and assess compliance risks, and then set objectives and targets for performance improvement based on top priorities. From here, it becomes possible to then define program improvement initiatives, assign and document responsibilities for compliance (who must do what and when), develop procedures and tools, and then allocate resources to get it done.

Assure compliance in operations. The organization needs to establish routine checks and inspections within departments to evaluate conformance with sub-process procedures. Process audits should be designed and implemented to cut across operations and sub-processes in order to evaluate conformance with company policies and procedures. Regulatory compliance audits should further be conducted to address program requirements (e.g., environmental, safety, mine safety, security). Audit performance must be measured and reported, and expectations must be set for operating managers to take responsibility for compliance.

Take action on issues and problems. Capture, log and categorize noncompliance issues, process nonconformances, and near misses. Implement a corrective/preventive action process based on the importance of issues. Be disciplined in timely completion, close-out, and documentation of all corrective/preventive actions.

Employ management of change (MOC) process. Robust MOC processes help ensure that changes affecting compliance (to the facility, operations, personnel, infrastructure, materials, etc.) are reviewed for their impacts on compliance. Compliance should be assured before the changes are made. Failure to do so is one of the most common root causes of noncompliance.

Ensure management involvement and leadership. Set the tone at the top. The Board of Directors and senior executives must set policy, culture, values, expectations, and goals. It is just as important that these individuals are the ones to communicate across the organization, to demonstrate their commitment and leadership, to define an appropriate incentive/disincentive system, and to provide ongoing organizational feedback.
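The "take action on issues and problems" practice above can be illustrated with a small tracking log. The following is a minimal Python sketch, not a prescribed tool: the `Issue` class, the `overdue_issues` helper, and the close-out windows in `DAYS_TO_CLOSE` are all hypothetical names and values chosen for illustration. It shows one way to categorize issues, assign due dates based on importance, and surface actions that have not been closed out on time.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Hypothetical policy: days allowed to close an action, by severity rating.
DAYS_TO_CLOSE = {"high": 7, "medium": 30, "low": 90}


@dataclass
class Issue:
    description: str
    category: str   # "noncompliance", "nonconformance", or "near miss"
    severity: str   # key into DAYS_TO_CLOSE
    opened: date
    closed: Optional[date] = None

    @property
    def due(self) -> date:
        """Date by which the corrective/preventive action should be closed."""
        return self.opened + timedelta(days=DAYS_TO_CLOSE[self.severity])

    def is_overdue(self, today: date) -> bool:
        """An issue is overdue if it is still open after its due date."""
        return self.closed is None and today > self.due


def overdue_issues(log, today):
    """Return open issues past their due date, earliest due date first."""
    return sorted((i for i in log if i.is_overdue(today)), key=lambda i: i.due)
```

A log built this way can be reviewed on a routine basis, for example by passing the current date to `overdue_issues` before each management meeting.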

Maintaining Ongoing Compliance

The compliance assurance program must be a living, breathing program. As risks change, the program must be refreshed, refined, and redeployed. A management system framework can help ensure operational sustainability. A management system drives the auditing process and helps companies say what they will do, do what they say and, importantly, verify it.

There is real value at the intersection of a compliance assurance program and management systems. Management systems define the internal controls that are in place to reduce risks, prevent losses, and sustain and improve performance over time through the Plan-Do-Check-Act (PDCA) cycle of continual improvement.

Testing and Monitoring

Testing, monitoring, and measuring are crucial elements of this cycle. Without them, it is difficult to understand what is working and what needs improvement. Robust testing and monitoring programs can serve as early warning systems for identifying potential compliance risks before they become enforcement issues.

Compliance should be tested and monitored throughout each level of the organization. A strong testing program will evaluate the results of the compliance risk assessment and assign compliance risks to the business units and processes where they are most likely to occur, creating clear lines of responsibility and accountability. Key risks and the related controls should be tested periodically using statistically valid sampling methodologies, and monitoring activities should be performed on an ongoing basis. Doing so produces trend data that provides the rationale needed for making changes to underlying business processes and helps reveal emerging risks.
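To make "statistically valid sampling" more concrete, here is a minimal Python sketch of one common approach: Cochran's sample size formula with a finite population correction, followed by a simple random draw. This is an assumption about method, since the text does not name a specific methodology. The function names, the 95% confidence level, and the 5% margin of error are illustrative defaults only.

```python
import math
import random
from statistics import NormalDist


def sample_size(population, confidence=0.95, margin=0.05, p=0.5):
    """Cochran's formula with finite population correction.

    p=0.5 is the most conservative assumption about the true exception rate.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided z-score
    n0 = z**2 * p * (1 - p) / margin**2                 # infinite-population size
    n = n0 / (1 + (n0 - 1) / population)                # finite population correction
    return min(population, math.ceil(n))


def draw_sample(record_ids, seed=None, **kwargs):
    """Draw a simple random sample of records (e.g., inspection forms) to test."""
    n = sample_size(len(record_ids), **kwargs)
    return random.Random(seed).sample(record_ids, n)
```

For a population of 1,000 control records, `sample_size(1000)` returns 278 under these defaults. Passing a fixed `seed` makes the selection reproducible, which can help when the sample itself needs to be documented for an audit.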

Ongoing compliance excellence relies on top management, operations managers, EHS personnel, and individual employees throughout the organization working together to build and sustain an organizational culture that places compliance on par with business performance. Senior management must focus on the overall culture of the company in terms of taking the necessary steps to reduce risk and make prevention part of daily operations. While it may be impossible to eliminate all risk exposure, a solid risk framework, assessment methodology, and compliance assurance program can help to prioritize risks for active management, sustained compliance, and positive business impacts.

Technology & the 8 Functions of Compliance

Virtually every regulatory program—environmental, health & safety, security, food safety—has compliance requirements that call for companies to fulfill a number of common compliance activities. While they do not necessarily need to be addressed all at once or from the start, considering the eight functions of compliance (as outlined below) when designing a compliance Information Management System (IMS) helps define the starting point and build a vision for the “end point” when planning IMS improvements. These compliance functions translate into modules—facility profiles, employee counts, training tracking, corrective action tracking, auditing tasks, compliance calendars, documents and records management, permit tracking, etc.—that are instrumental in establishing or improving a company’s capability to comply.

8 Functions of Compliance

- Inventory means taking stock of what exists. The outcome of a compliance inventory is an operational and EHS profile of the company’s operations and sites. In essence, the inventory is the top filter that determines the applicability of regulatory requirements and guides compliance plans, programs, and activities. For compliance purposes, the inventory is quite extensive, including (but not limited to) the following:

  - Activities and operations (i.e., what is done – raw material handling, storage, production processes, fueling, transportation, maintenance, facilities and equipment, etc.)

  - Functional/operational roles and responsibilities (i.e., who does what, where, when)

  - Emissions

  - Wastes

  - Hazardous materials

  - Discharges (operational and stormwater-related)

  - Safety practices

  - Food safety practices

- Authorizations, permits & certifications provide a “license to construct, install, or operate.” Most companies are subject to authorizations/permits at the federal, state, and local levels. Common examples include air permits, operating permits, Title V permits, safe work permits, tank certifications, discharge permits, and construction authorizations. In addition, there may be required fire and building codes and operator certifications. Once the required authorizations, permits, and/or certifications are in place, some regulatory requirements also call for companies to prepare and update associated plans.

- Plans are required by a number of regulations. These plans typically outline compliance tasks, responsibilities, reporting requirements, schedule, and best management practices to comply with the related permits. Common compliance-related plans may include SPCC, SWPPP, SWMP, contingency, food safety management, and security plans.

- Training supports the permits and plans that are in place. It is crucial to train employees to follow the requirements so they can effectively execute their responsibilities and protect themselves, company assets and communities. Training should cover operations, safety, security, environment, and food safety aimed at compliance with regulatory requirements and company standards and procedures.

- Practices in place involve doing what is required to follow the terms of the permits, related plans and regulations. These are the day-to-day actions (regulatory, best management practices, planned procedures, SOPs, and work instructions) that are essential for following the required processes.

- Monitoring & inspections provide compliance checks to ensure locations and operations are functioning within the required limits/parameters and the company is achieving operational effectiveness and performance expectations. This step may include some physical monitoring, sampling, and testing (e.g., emissions, wastewater). There are also certain regulatory compliance requirements for the frequency and types of inspections that must be conducted (e.g., forklift, tanks, secondary containment, outfalls). Beyond regulatory requirements, many companies have internal monitoring/inspection requirements for things like housekeeping, sanitation, and process efficiency.

- Records provide documentation of what has been done related to compliance: current inventories, plans, training, inspections, and monitoring required for a given compliance program. Each program typically has recordkeeping, records maintenance, and retention requirements specified by type. Having a good records management system is essential for maintaining the vast number of documents required by regulations, particularly since some agencies, like OSHA, set retention periods as long as 30 years.

- Reports are a product of the above compliance functions. Reports from ongoing implementation of compliance activities often are required to be filed with regulatory agencies on a regular basis (e.g., monthly, quarterly, semi-annually, annually), depending on the regulation. Reports also may be required when there is an incident, emergency, recall, or spill.
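As one illustration of how the reporting function might translate into a compliance calendar module, the following minimal Python sketch generates filing due dates from the reporting frequencies named above. The `ReportObligation` class, the report names, and the assumption that filings fall on a fixed day of the month are all hypothetical. Actual due dates depend on the specific regulation and permit.

```python
from dataclasses import dataclass
from datetime import date

# Months between filings for each reporting frequency named in the text.
MONTHS_BETWEEN = {"monthly": 1, "quarterly": 3, "semi-annually": 6, "annually": 12}


@dataclass(frozen=True)
class ReportObligation:
    name: str          # hypothetical report name
    frequency: str     # key into MONTHS_BETWEEN
    due_day: int = 15  # assumed day of month; keep <= 28 so every month is valid


def due_dates(obligation: ReportObligation, year: int) -> list[date]:
    """Due dates in the given year, one at the end of each reporting period."""
    step = MONTHS_BETWEEN[obligation.frequency]
    return [date(year, month, obligation.due_day) for month in range(step, 13, step)]


def build_calendar(obligations, year):
    """Merge all obligations into one list of (due date, report name), in date order."""
    entries = [(due, ob.name) for ob in obligations for due in due_dates(ob, year)]
    return sorted(entries)
```

The same pattern could be extended to inspections, training refreshers, or permit renewals by adding further obligation types.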

Reliable Compliance Performance

Documented procedures for executing these eight functions, along with management oversight and continual review and improvement, are what eventually get integrated into an overarching management system (e.g., environmental, health & safety, food safety, security, quality). The compliance IMS helps create process standardization and, subsequently, consistent and reliable compliance performance.

In addition, completing and organizing/documenting these eight functions of compliance provides the following benefits:

- Helps improve the company’s capability to comply on an ongoing basis

- Establishes compliance practices for when an incident occurs

- Creates a strong foundation for internal and third-party compliance audits and for answering outside auditors’ questions (agencies, customers, certifying bodies)

- Helps companies know where to look for continuous improvement

- Reduces surprises and unnecessary spending on reactive compliance-related activities

- Informs management’s need to know

- Enhances confidence of others (e.g. regulators, shareholders/investors, insurers, customers), providing evidence of commitment, capability, reliability and consistency in the company’s compliance program

Why Pursue an EHS Management System?

The discipline required to design and implement a compliant environmental, health & safety (EHS) management system can help organizations improve in many areas beyond the defined tasks themselves. In particular, an EHS management system helps an organization to:

- Identify and categorize the organization’s EHS risks. Once this information is known, management will be able to prioritize and then pick and choose how to reduce risks and liabilities to acceptable levels. These risks will be better controlled through strict management accounting. Employees will become more attuned to thinking outside the box to help management improve the overall operation.

- Develop work instructions and/or procedures to guide an employee’s actions and ensure that each EHS task is completed in a disciplined manner approved by management. This will reduce the risk to an organization of an employee accidentally making an environmental, health and/or safety mistake that causes the employee or others to be injured or worse; creates public awareness of the problem; or causes governmental inspections, fines, and loss of business.

- Provide management assurance that the company does, in fact, know and understand the legal and EHS requirements that the business must meet on a daily basis. These legal requirements will drive improvement in having up-to-date procedures and work instructions for employees to follow every day.

- Develop meaningful EHS goals and objectives. These objectives drive improvement in environmental and personal health & safety performance. They may also reduce internal costs by reducing trips to the hospital, payments for workers’ compensation, and employees on disability. Each business will have different goals that should change each year to ensure continuous improvement over time.

- Develop a strong training program. Well-written procedures and work instructions help define the actions required of employees to meet EPA and OSHA requirements and company directives. A well-trained workforce is a motivated and happy workforce. Turnover is reduced, accidents and incidents are reduced, and production efficiencies increase. Employees are very aware when an organization takes time to assure each job requested is completed in the safest and most environmentally sound manner possible.

- Develop appropriate monitoring and measurement of key characteristics and requirements. These key performance indicators are based on regulations and laws intended to guide the organization’s actions in a direction of continuous improvement and compliance.

- Allow employees to audit and verify that the EHS management systems are functioning as designed and implemented. By continuously auditing each OSHA program and environmental function, the organization will discover issues of concern and non-conformances prior to an employee being injured or worse, having an environmental spill or incident, or incurring a governmental agency finding. This allows the company to choose a timeframe that will best help improve the situation without undue influence by outsiders.

- Design a fully functioning corrective/preventive action program to monitor issues of concern and/or non-conformance and the actions used to rectify each situation identified. As employees watch management fix problems, they will learn that management is concerned about continuous improvement and the employees will go back to making improvement suggestions. These suggestions will further drive improvement in areas outside the original EHS management systems.

- Look at the business model and the EHS management systems in a holistic fashion. By using this self-reflection and identifying improvement opportunities, management can direct responsibilities for improvement actions across many departments within the company. Each of these improvement opportunities will again help the bottom line and reduce the possibility of an EHS liability now or in the future.

- Know that you have done everything possible to maintain the business in a manner to meet all OSHA and EPA rules and regulations, as well as association requirements. The organization will have done everything possible to assure that the environment and the health & safety of employees are protected every day the doors are open for business. To a business owner, that knowledge is priceless.

Management Systems – Back to Basics

A management system is the organizing framework that enables companies to achieve and sustain their operational and business objectives through a process of continuous improvement. A management system is designed to identify and manage risks—safety, environmental, quality, business continuity, food safety (and many others)—through an organized set of policies, procedures, practices, and resources that guide the enterprise and its activities to maximize business value.

The management system addresses:

- What is done and why

- How it is done and by whom

- How well it is being done

- How it is maintained and reviewed

- How it can be improved

Creating an Effective and Valuable Management System

Each company’s management system reflects its unique culture, vision, and values. To be effective and valuable, the management system must be tailored and focused on how it can enhance the business performance of the organization. It must also be:

- Useful to people in the operations

- Intuitive—organized the way operations people think

- Flexible—making use of methods and tools as they are developed and documented

- Valuable from the outset—addressing the most critical risks and processes

- Linked to the business of the business (not “pasted on”), with ownership at the operational level

- A means to better align operational quality, safety, and environment with the business

Attributes of an effective management system are senior management expectations and guidance coupled with employee engagement. Importantly, a management system involves a continual cycle of planning, implementing, reviewing, and improving the way in which safety, quality, and environmental obligations and objectives are met. In its simplest form, this involves implementing the Plan, Do, Check, Act/Adjust (P-D-C-A) cycle for continuous improvement.

Auditing for Ongoing Compliance

The connection between management systems and compliance is vital in avoiding recurring compliance issues and in reducing variation in compliance performance. In fact, reliable and effective regulatory compliance is commonly an outcome of consistent and reliable implementation of a management system.

Conducting periodic audits is a practical way to test a management system’s implementation maturity and effectiveness. One of the many advantages of audits is that they help identify gaps so that corrective/preventive actions can be put into place and then sustained and improved through the management system.

Audits also help companies with continuous improvement initiatives; properly developed audit programs help measure results over time. To achieve best value, audits should emphasize finding patterns that can yield opportunities for learning and continual improvement, rather than “gotchas” for exceptions that are discovered.

Management System Standards

Several options are available for structuring management systems, whether they are certified by third-party registrars and auditors, self-certified, or used as internal guidance and for potential certification readiness.

The International Organization for Standardization (ISO) standards are some of the most commonly applied. The ISO standards for quality (ISO 9001), environment (ISO 14001), health & safety (ISO 45001, which replaced OHSAS 18001), business continuity (ISO 22301), and food safety (ISO 22000, the basis for the FSSC 22000 certification scheme) have consistent elements, allowing organizations to more easily align their various management systems. Aligned management systems help companies to achieve improved and more reliable quality, environmental, and health & safety performance, while adding measurable business value.

Certification

Companies can become certified to each of the standards discussed above. Certification has a number of benefits, including the following:

- Meet customer or supply chain requirements

- Use outside drivers to maintain management system process discipline (e.g., periodic risk assessment, document management, compliance evaluation, internal audits, management review)

- Take advantage of third-party assessment and recommendations

- Improve standing with regulatory agencies (e.g., USEPA, OSHA, FDA, and state programs)

- Demonstrate the application of industry best practice in the event of incidents/accidents requiring defense of practices

However, if there is no market or other business driver, certification can lead to unnecessary additional cost and effort regarding management system development. Certification in itself does not mean improved performance—management system structure, operation, and management commitment determine that.

Business Value

There are a number of reasons to implement a management system. A properly designed and implemented management system brings value to organizations in a number of ways:

- Risk management

  - Identify risks

  - Set priorities for improvement, measurement, and reporting

  - Provide great opportunity to identify, share, and learn best practices, while recognizing operational differences

- Protection of people

  - Send people home the way they arrived at work

  - Protect the public and the environment

- Compliance assurance

  - Improve and sustain regulatory compliance

- Business value

  - Continually improve quality, environmental, and safety performance across the organization (employee, public, equipment, infrastructure)

  - Reduce incident costs and accrued liabilities

  - Protect assets

- Reliability

  - Assure processes, methods, and practices are in place, documented, and consistently applied

  - Reduce variability in processes and performance

- Employee engagement

  - Help employees to find and use current versions of all procedures and documents

  - Provide a ready reference for field management to structure location-specific procedures

  - Enable the effective transfer of standards, methods, and know-how in employee training, new job assignments, and promotions

Why Safety Culture Matters

A recent episode of The Daily, a podcast from The New York Times, discussed the safety culture of the Boeing manufacturing plant in North Charleston, South Carolina, which builds the 787 Dreamliner. The episode came as Boeing faced intense scrutiny over the 737 MAX 8, the aircraft involved in two fatal crashes worldwide in the last six months.

Concerns About Culture

The Boeing 737 MAX 8 was grounded by the FAA on March 13, 2019 amid concerns that recently introduced flight control software contributed to both crashes. The subsequent scrutiny on the company brought attention to the safety culture of the Charleston plant.

Interviews in the podcast suggest common characteristics of a negative safety culture were present at Boeing. For example, there was reportedly significant pressure to meet production deadlines, including financial incentives for meeting hourly production goals. Some managers allegedly took defective parts and installed them on aircraft to meet these deadlines. One such incident described on the podcast episode included an attempt to rub off the red paint that is applied to defective parts to prevent installation. Related to defective parts, managers were reportedly pressured to reduce the number of parts damaged by employees during manufacturing. A former quality manager interviewed in the episode alleges that this pressure led to damaged parts being installed rather than reported to management or quality control.

Safety culture is often defined informally as “the way we do things around here” when it comes to safety practices. Essentially, safety culture is the product of the shared values, beliefs, norms, and organizational practices in a company about working safely. An organization’s safety culture is ultimately reflected in the way safety is managed in the workplace. The culture breaks down when the disregard for safety becomes “management practice.”

Characteristics of a Strong Safety Culture

Strong safety cultures have several characteristics in common. Kestrel’s research into the topic of safety culture has identified two traits that are particularly important to an effective safety culture: leadership and employee engagement. Best-in-class safety cultures have robust systems in place to ensure that each of these traits, among others, is mature, well-functioning, and fully ingrained into the standard practices of the organization.

Organizations with strong safety cultures typically exhibit many of the following attributes:

- Communication. Communication is most effective when it comprises a combination of top-down and bottom-up interaction. Senior management sets the strategic goals and vision for the company’s safety program. It is vital that all levels of management (senior, middle, supervisory) communicate the strategy clearly to the workers who carry out the company’s mission. It is equally important that workers provide feedback on a practical level about what’s working and what’s not.

- Commitment. When it comes to safety, actions truly speak louder than words. A lack of commitment, as demonstrated by action (or lack thereof), comes across loud and clear to staff. For example, requiring staff to work excessive hours or use defective parts to meet productivity goals sends a clear message that productivity is more important than safety.

- Caring. Caring is about doing whatever is necessary to ensure employees return home safely every night. It involves showing concern for the personal safety of individuals, not just making a commitment to the overall idea of safety.

- Cooperation. Safety works best if management and workers are on the same team. Cooperation means working together to develop a strong safety program (e.g., management involving line workers in creating safety policies and procedures). It means management seeks feedback from workers about safety issues—and uses that feedback to make improvements. And it means there is no blame when incidents occur.

- Coaching. Coaching each other—peer to peer, supervisor to employee, even employee to management—is an important way to keep everyone on track. Coaching involves non-judgmentally providing feedback for improvements and, correspondingly, accepting and incorporating that feedback as constructive criticism.

- Procedures. There should be documented, clear procedures for every task. This not only prevents disagreement about what is required, it also shows commitment when things are put in writing. Procedures should be designed jointly by management and workers for practicality and to encourage improved cooperation, communication, and buy-in.

- Training. Training is a more formal, documented process for ensuring that employees follow safety processes and procedures. Formal training should happen frequently enough for employees to feel prepared to safely do their jobs.

- Tools. All equipment and tools should be in good repair, free of debris, and functioning as designed. Inadequate tools directly impact safety/protection and indirectly impact perception of management commitment. Boeing’s alleged practices send a clear message that safety is not as important as productivity.

- Personnel. There must be enough workers to do each task safely. The company should not sacrifice individual safety because of being understaffed (i.e., requiring shortcuts/overtime to meet production goals).

- Trust. Trust in the safety program, in senior management, and in each other is built when each of these characteristics is present and treated as a company-wide priority.

Benefits of a Best-in-Class Safety Culture

Strong safety performance is a cornerstone of any business. When these characteristics come together to create a best-in-class safety culture, everyone wins:

- Fewer accidents, losses, and disruptions

- Improved employee morale

- Increased productivity

- Lower workers’ compensation and insurance claims

- Improved compliance with OSHA regulations

- Improved reputation to attract new customers and employees and retain existing ones

- Better brand and shareholder value

Business Continuity: Building a Resilient Organization

When business is disrupted, the costs can be substantial. Unfortunately, every organization is at risk from potential operational disruptions—natural disasters, fire, sabotage, information technology (IT) viruses, data loss, acts of violence. Recent world events have further challenged organizations to prepare to manage previously unthinkable situations that may threaten the future of the business.

Securing Company Assets

This goes beyond the mere Emergency Response Plan or disaster recovery activities that have been previously implemented. Organizations must now engage in a more comprehensive process to secure their companies’ assets (e.g., people, technology, products, and services). Today’s threats require implementation of an ongoing, interactive process that assures the continuation of the organization’s core business activities and data center(s) before, during, and, most importantly, after a major crisis event.

Creating a Resilient Organization

Business continuity planning helps ensure that companies have the resources and information needed to maintain service, reliability, and resiliency under adverse conditions. While companies can’t plan for everything, they can take steps to understand and effectively manage events that might compromise their products/services, supply chain, quality, security, and future as an organization.

A Business Continuity Plan ensures that all involved parties understand who makes decisions, how the decisions are implemented, and what the roles and responsibilities of participants are when an incident occurs. Through business continuity planning, companies are able to:

- IDENTIFY the human, property, and operational impacts of potential business threats

- EVALUATE the potential severity of associated risks

- ESTIMATE the likelihood of business threats occurring

- CREATE timelines for restoration and strategies that proactively mitigate the most pressing business threats, take advantage of opportunities that lie ahead, and provide for a more resilient and sustainable future
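The evaluate and estimate steps above are often combined into a simple risk ranking. The following minimal Python sketch assumes a conventional severity-times-likelihood score on 1-to-5 scales. That scoring scheme, the `Threat` class, and the example ratings are illustrative assumptions and are not taken from any particular standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    name: str
    severity: int    # 1 (minor) to 5 (catastrophic), hypothetical scale
    likelihood: int  # 1 (rare) to 5 (almost certain), hypothetical scale

    @property
    def score(self) -> int:
        """Simple risk score: potential severity multiplied by likelihood."""
        return self.severity * self.likelihood


def prioritize(threats):
    """Rank threats from highest to lowest risk score."""
    return sorted(threats, key=lambda t: t.score, reverse=True)


# Example ratings are invented for illustration only.
threats = [
    Threat("Natural disaster", severity=5, likelihood=1),
    Threat("Fire", severity=5, likelihood=2),
    Threat("Data loss", severity=4, likelihood=4),
    Threat("IT virus", severity=3, likelihood=4),
]
for threat in prioritize(threats):
    print(f"{threat.score:>2}  {threat.name}")
```

A ranking like this gives a starting point for deciding which threats need restoration timelines and mitigation strategies first. It does not replace the judgment of the people who know the operation.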

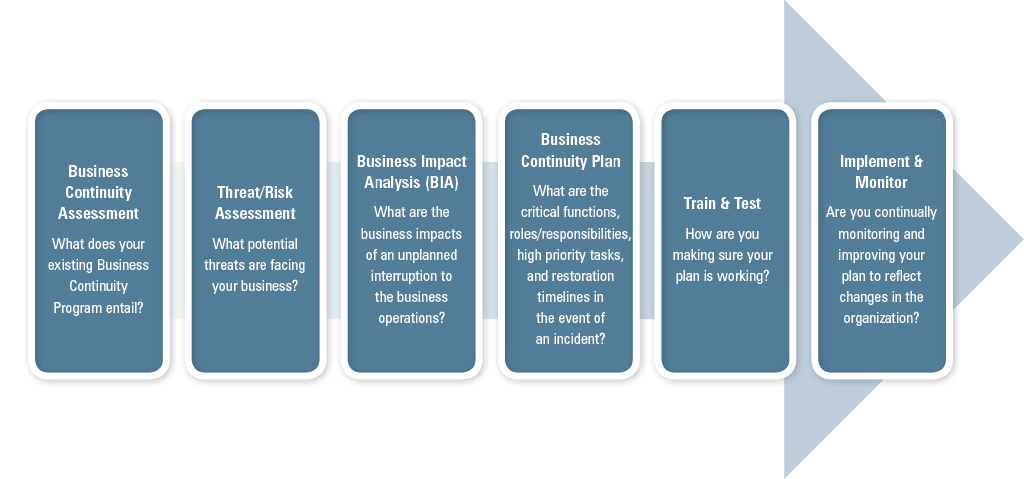

Systematic Approach

A sound Business Continuity Program relies on a systematic approach to identify and critically evaluate risks/opportunities, as outlined below. This approach broadens the scope of issues beyond mere emergency response and allows companies to budget for and secure the necessary resources to support critical business activities before, during, and after a major crisis event. Ultimately, following this process helps companies to stay in business through a time of crisis.

Sustaining Business for the Long Term

Sustainability is about staying in business for the long term, and today, business continuity is key to sustaining business over time. That is because a well-developed and implemented Business Continuity Plan:

- Keeps employees and the community safe when an incident occurs

- Protects the organization’s important assets (e.g., people, technology, products, services)

- Reduces disruption to critical functions in order to limit financial impacts due to loss of product/service

- Reduces adverse publicity, loss of credibility, and loss of customers

- Reduces legal liability and regulatory exposure

- Reduces the risk of losing critical business data (e.g., historical, operational, customer, regulatory compliance)

- Provides for an orderly and timely recovery by allowing critical decisions to be made in a non-crisis mode

- Helps companies mitigate risks and focus on the future

*****

Guiding Standards

ISO 22301: Societal Security – Business Continuity Management Systems is specifically designed to help organizations protect against, reduce the likelihood of occurrence, prepare for, respond to, and recover from disruptive incidents when they arise. Like other ISO standards, ISO 22301 applies the Plan-Do-Check-Act/Adjust model to developing, implementing, and continually improving a Business Continuity Management System. Following this internationally recognized standard allows organizations to leverage their existing management systems and ensure consistency with any other ISO management system standards that may already be in place (e.g., ISO 14001 – environment, ISO 9001 – quality, ISO 45001 – safety, ISO 22000 – food safety).

The American Society for Industrial Security (ASIS) Business Continuity Management System Standard, National Fire Protection Association (NFPA) 1600: Standard on Disaster/Emergency Management and Business Continuity Programs, and Office of the Comptroller of the Currency (OCC) federal banking requirements for business continuity provide further industry-specific guidance on business continuity management.

Using Data Analysis for Business Decisions

Today’s business managers face greater complexities than ever when it comes to making business decisions. For every business decision, there are a number of factors that impact the associated risks. Fortunately, the use of statistics, predictive analytics, and data mining has become increasingly useful in taking the “gut feel” out of making important and often complex business decisions.

Data-Driven Decisions

Most people are familiar with common descriptive statistical techniques, like measures of central tendency (e.g., mean, median, mode) or variability (e.g., interquartile range, standard deviation). More advanced data mining and predictive analytical techniques are increasingly being used to explore and investigate past performance to gain insight for future business decision making.
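As a brief illustration, the descriptive measures named above can be computed with Python's standard `statistics` module. The monthly incident counts below are made-up numbers used only to show the calls.

```python
import statistics

# Illustrative data only: incidents recorded in eight consecutive months
monthly_incidents = [2, 3, 3, 5, 7, 8, 9, 12]

# Measures of central tendency
mean = statistics.mean(monthly_incidents)
median = statistics.median(monthly_incidents)
mode = statistics.mode(monthly_incidents)

# Measures of variability
stdev = statistics.stdev(monthly_incidents)  # sample standard deviation
q1, q2, q3 = statistics.quantiles(monthly_incidents, n=4)  # quartile cut points
iqr = q3 - q1  # interquartile range

print(f"mean={mean}, median={median}, mode={mode}")
print(f"stdev={stdev:.2f}, IQR={iqr:.2f}")
```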

Data mining draws on large amounts of data to identify patterns, which are often classified as opportunities or risks. Predictive analytics encompasses a variety of statistical techniques that are used to analyze historical data to predict the most probable future events. A few examples of these include the following:

- Discriminant Analysis – a machine learning model where a computer program “learns” a pre-existing data set that includes attributes and outcomes for each individual, and then predicts probable outcomes for individuals in the new data set based on attributes.

- Linear Regression – creates an equation so that one variable can be predicted based on the known values of other variables.

- Logistic Regression – a machine learning model where a computer program “learns” a pre-existing data set that includes attributes and a binary (“yes/no”) outcome for each individual, then predicts “yes/no” outcome for each individual in a new data set, along with a probability associated with the decision.

- Decision trees – a machine learning model where a computer program “learns” a pre-existing data set that includes attributes and outcomes (not necessarily binary) for each individual, then predicts outcomes for each individual in a new data set, along with confidence in the decision; also identifies the attributes that are most helpful for making predictions (i.e., those that are best able to discriminate between outcomes).

- Neural networks – similar to decision trees, but more effective if finding the connections between attributes is a concern.
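To make one of these techniques concrete, the sketch below fits a simple linear regression (one predictor) by ordinary least squares using only built-in Python. The variable names and the data are hypothetical and deliberately lie on a perfect line so the result is easy to check; real data would be noisier and would usually be handled with a statistics library.

```python
def fit_line(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Predict y for a new x using the fitted line."""
    return intercept + slope * x


# Hypothetical example: training hours per employee vs. incident rate
training_hours = [1, 2, 3, 4, 5]
incident_rate = [10.0, 8.0, 6.0, 4.0, 2.0]

slope, intercept = fit_line(training_hours, incident_rate)
print(slope, intercept)
print(predict(slope, intercept, 4.5))
```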

Together, this information can help decision makers to predict the outcome(s) of a decision before it is made—and make smarter decisions based on data instead of gut feelings. The following case studies demonstrate the value that statistics provide when it comes to making important business decisions.

Case Study: Wildfire Risk Index

For a large transportation organization, wildfires have historically presented a unique challenge. The company has worked diligently over the past several years to control its fire risk through research and a number of assessments. To help further minimize the wildfire risk, the company turned to past data and is working with Kestrel to develop a comprehensive Wildfire Risk Index to:

- Quantify the operational risks of wildfires (i.e., identify environmental conditions, determine areas of concern)

- Make informed business decisions to help minimize identified risks

Creating the Index requires a significant amount of data from both internal and external resources, including traffic, weather, geography, internal fire incidents, and others. This information is used in several components contained within two main models that create the Wildfire Risk Index. These model components are relatively simple when used on their own. The complexity arises when combining the various models and their components into a single Wildfire Risk Index that reasonably reflects relative risks, while considering all variables.

The ultimate output of the Wildfire Risk Index is a single number that quantifies the relative risk of wildfire by location and by month. This information will help the company to:

- Identify the areas of greatest risk.

- Focus resources on those areas.

- Make more informed decisions regarding operations—like when to plan hot work and when and where to perform vegetation control—to help prevent future incidents.
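The actual models behind the Wildfire Risk Index are not described here, so the following is only a generic sketch of how several components can be combined into a single relative score by location and month. The component names, weights, and values are all hypothetical; the technique shown is min-max normalization followed by a weighted average.

```python
def normalize(values):
    """Scale a dict of raw values to the 0-1 range (min-max normalization)."""
    lo, hi = min(values.values()), max(values.values())
    if hi == lo:
        return {key: 0.0 for key in values}
    return {key: (v - lo) / (hi - lo) for key, v in values.items()}


def risk_index(components, weights):
    """Weighted average of normalized components for each (location, month)."""
    normalized = {name: normalize(vals) for name, vals in components.items()}
    keys = next(iter(components.values())).keys()
    total_weight = sum(weights.values())
    return {
        key: sum(weights[name] * normalized[name][key] for name in components)
        / total_weight
        for key in keys
    }


# Hypothetical components keyed by (location, month)
components = {
    "dryness": {("Segment A", "Jul"): 0.9, ("Segment A", "Jan"): 0.1, ("Segment B", "Jul"): 0.5},
    "traffic": {("Segment A", "Jul"): 40, ("Segment A", "Jan"): 40, ("Segment B", "Jul"): 10},
}
weights = {"dryness": 0.6, "traffic": 0.4}  # assumed weights, for illustration

index = risk_index(components, weights)
highest = max(index, key=index.get)
print(highest, round(index[highest], 2))
```

Because the output is relative, it supports ranking locations and months against each other rather than estimating an absolute probability of fire.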

Case Study: Incident Data

For a large petroleum refining organization, safety and environmental incidents present a significant risk to operations. In order to reduce incident frequency, the company has implemented a robust safety management system, which includes frequent audits and inspections. Despite the company’s best efforts, however, incidents have continued to occur.

To further improve safety and environmental performance, Kestrel is working with the company to conduct detailed reviews of previous incidents using Kestrel’s proprietary Human Performance Reliability (HPR) approach. This approach identifies and classifies the human factors contributing to incidents, as well as the controls associated with those human factors (engineered, administrative, and/or PPE). Once the reviews are finished, the results are statistically analyzed to generate a prioritized list of human factors to be addressed. Kestrel’s Human Factors Integration Tool (HFIT™) software then generates a list of existing controls associated with the top human factors, as well as a list of missing controls that could be created and implemented.

The ultimate output of the incident review process is to help the company identify the human factors contributing to incidents, create or improve associated controls, manage operational risks, and protect the health and safety of workers and the surrounding environment.
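As a rough illustration of what a prioritized list of human factors might look like, the sketch below simply counts how often each factor appears across incident reviews. This is not the HPR approach or the HFIT software, whose internals are not described here; the factor labels and review data are hypothetical.

```python
from collections import Counter


def prioritize_factors(incident_reviews):
    """Return (factor, count) pairs, most frequently cited factor first."""
    counts = Counter(
        factor for review in incident_reviews for factor in review
    )
    return counts.most_common()


# Hypothetical reviews: each inner list holds the factors cited for one incident
reviews = [
    ["fatigue", "unclear procedure"],
    ["unclear procedure"],
    ["time pressure", "unclear procedure", "fatigue"],
]

for factor, count in prioritize_factors(reviews):
    print(f"{factor}: cited in {count} incident(s)")
```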

Versatility

These examples demonstrate how predictive analytics can be used to support decision making. Because predictive analytics is versatile and draws on a wide variety of statistical techniques, it can help companies analyze a broad range of problems and gain insight for future business decision making.

Making Your SMS Work for You

A Safety Management System (SMS) is a systematic organization of policies, processes, programs, procedures, and records. Like other management systems, an SMS is built on the Plan, Do, Check, Act/Adjust (PDCA) cycle. Ideally, safety-related activities are planned, done, checked (through auditing, inspections, investigations), and finally reviewed by both local and executive management to facilitate continuous improvement (i.e., adjust).

Keys to a Successful SMS

The primary purpose of an SMS is to effectively manage safety-related risks. But how does an organization ensure that its SMS—new or existing—actually does this? Kestrel has compiled the following best practice tips for implementing an effective SMS:

- All employees with management or supervisory responsibilities must be visibly and conspicuously committed to safety and the SMS. Management demonstrates leadership and promotes commitment to improving safety performance through active and visible participation. It is up to management to routinely demonstrate that this is not just the “flavor of the month” but the organization’s way of doing business. (Choudhry, Fang & Ahmed, 2008; Hansen, 2006; Lyon & Hollcroft, 2006; OSHA, 2015)

- Employees are engaged in the SMS—emotionally and cognitively. Employees must understand how the SMS works and believe in the value that it offers them and the organization. (Wachter & Yorio, 2014; Moraru, Babut & Cioca, 2011)

- The SMS is integrated into other business objectives and aligned with other in-place management systems (e.g., quality, environmental). The SMS should support the company’s goals and objectives. Aligning and integrating with other systems further improves efficiency, consistency, and understanding. This also provides the flexibility needed to function in a dynamic business environment. (Hansen, 2006)

- There are clearly defined safety policies and principles. Policies should be established, communicated, and updated, as necessary. (Hansen, 2006)

- The SMS establishes challenging objectives, goals, and plans. High standards of performance that are tracked and measured ultimately lead to performance improvements. (Hansen, 2006; OSHA, 2015)

- Contractors and other third parties are effectively managed. Contractors, suppliers, and others must be assessed and monitored for their capabilities and performance. Clear performance standards should be established to ensure that these third parties meet needs and uphold safety management expectations. (Hansen, 2006; OSHA, 2015)

- The SMS ensures compliance with legal and other requirements. The SMS should help the organization to measure and verify compliance with applicable legal and regulatory requirements. (Hansen, 2006)

- There is effective communication about the SMS, including clearly defined roles and responsibilities. Employees need to understand the purpose of the SMS and their roles in achieving related goals and objectives. (Hansen, 2006; OSHA, 2015)

- Staff receive continuous safety training and development opportunities. Safe operations rely on well-trained employees and contractors who understand the SMS and how to perform their jobs in the safest ways possible. (Choudhry, Fang & Ahmed, 2008; Moraru, Babut & Cioca, 2011; Lyon & Hollcroft, 2006; OSHA, 2015)

- The organization is committed to hazard identification, risk assessment, and implementing effective controls. Identifying, assessing, and prioritizing hazards can mitigate risks to employees, customers, contractors, and the general public. Procedures should be put into place to continually identify workplace hazards and evaluate risks. Doing so must be a continuous process with periodic inspections to identify new hazards. (Hansen, 2006; OSHA, 2015)

- The organization conducts injury and incident investigations, produces reports, and follows through on corrective actions. Effective incident investigations provide the opportunity to learn about and improve safety performance. Investigations should identify the root cause and contributing factors, determine and track corrective actions, and share lessons learned across the organization to prevent recurrence. Perhaps most importantly, the organization should refrain from using the investigation to figure out who to blame for the incident. Fault-finding, rather than fact-finding, leads to mistrust and a negative safety culture. (Singh, 2014; OSHA, 2015)

- Audits provide the opportunity for ongoing re-evaluation and to demonstrate a strong commitment to continuous improvement. The SMS must be regularly reviewed to ensure that it is delivering consistent, desired performance. Planning and implementing internal audits helps verify whether safety processes and activities are meeting goals and creating the desired outcomes. Audits also help determine the effectiveness of the SMS and uncover new opportunities to systematically guide the PDCA continual improvement process. Sharing best practices and lessons learned further promotes ongoing improvement. (Hansen, 2006; Choudhry, Fang & Ahmed, 2008)

- Risk-based, data-driven decision-making is informed by both leading and lagging indicators. While lagging indicators provide valuable information for SMS improvement, leading indicators provide that information without waiting until someone gets hurt. Advanced statistical techniques and predictive analytics can help predict where and when an incident is most likely to happen based on leading indicators. Organizations can make drastic safety performance improvements by making a strategic, sustainable investment in gathering and analyzing leading indicators.

- Implementation is guided from the top down; buy-in is obtained at all levels of the organization. Ownership of the SMS resides with the safety department and executive management, while ownership of implementation and performance resides with all departments and operations. Safety should be continually reinforced as a line-organization responsibility. (OSHA, 2015; Choudhry, Fang & Ahmed, 2008; Moraru, Babut & Cioca, 2011)

- The SMS builds on and improves what already exists. The SMS should fit within the organization’s existing business structure and be tailored to the organization’s needs, operations, risks, processes, culture, and existing strengths.
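To illustrate the difference between lagging and leading indicators mentioned in the list above, the sketch below computes one of each. The total recordable incident rate (TRIR) is a widely used lagging indicator, calculated as recordable cases multiplied by 200,000 and divided by total hours worked, where 200,000 represents 100 full-time employees working 40 hours a week for 50 weeks. The inspection completion rate is shown as one possible leading indicator; the choice of indicator and the input numbers are assumptions for illustration.

```python
def trir(recordable_cases, hours_worked):
    """Lagging indicator: recordable cases per 100 full-time workers per year."""
    return recordable_cases * 200_000 / hours_worked


def completion_rate(completed, scheduled):
    """Leading indicator (example): share of scheduled inspections completed."""
    return completed / scheduled if scheduled else 0.0


# Illustrative numbers only
print(trir(recordable_cases=3, hours_worked=400_000))
print(completion_rate(completed=45, scheduled=50))
```

Tracking both over time lets an organization see whether improvements in leading indicators are followed by improvements in lagging ones.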

SMS Benefits

For organizations that are able to implement a strong SMS, there can be many benefits. For example, the Health and Safety Executive in the UK (Greenstreet Berman Ltd, 2006) published six case studies in 2006 illustrating the benefits of implementing an SMS. Some of the business benefits identified in these case studies included the following:

- 50% reduction in absenteeism

- Static or decreased insurance premiums

- Access to wider market based on improved safety outcomes

Another case study published in 2010 describes Newell Rubbermaid’s SMS success, as the company realized an 80% reduction in recordables and an 81% reduction in workers’ compensation costs after implementing a proactive SMS (Zahn, 2010).

In general, most organizations that adhere to the best practices described above may realize:

- Improved health and safety performance and compliance

- Greater operational efficiency

- Reduced injuries and injury-related costs

- Lower insurance premiums by demonstrating to insurers that risk is effectively controlled

- Better morale when employees see employers actively looking after their health and safety

- Improved reputation that comes with the public noticing the organization’s responsible attitude toward employees

- Improved business efficiency and, correspondingly, reduced costs

Know Where You Stand: Facility Safety

Strong safety performance is a cornerstone of any business. For many companies, it can make the difference in being qualified to work with customers and successfully expand the business. On the other end of the spectrum, repeated safety accidents can lead to potentially serious penalties for failing to comply with OSHA safety requirements, as well as higher insurance rates.

Safe facilities, work practices, and training help to attract and retain employees and enable them to go home at the end of the shift without workplace injury or concerns. In addition, workers’ compensation rates and the ability to maintain adequate insurance both depend on an organization’s safety performance.

OSHA Safety Compliance and Inspection

The Occupational Safety and Health Administration (OSHA) is paying much more attention to compliance with federal safety regulations due, in part, to a number of significant accidents in recent years. Many involved manufacturing in high-risk industries, where the accidents caused injuries, evacuations, environmental impacts, and significant business disruption. While not frequent, accidents like these draw attention from the public and communities, news media, and regulatory agencies. This, in turn, has increased scrutiny on all businesses, especially those in high-risk industries or with a history of safety issues. When it comes to safety compliance, an organization should never be overly confident.

OSHA has emphasized increased inspections for noncompliance with safety regulations. OSHA tracks the types of safety violations found and their classification as “serious” or “willful.” A serious violation is defined as “one in which there is a substantial probability that death or serious physical harm could result, and the employer knew or should have known of the hazard.” A willful violation is defined as one “committed with an intentional disregard of or plain indifference to the requirements of the Occupational Safety and Health Act and regulations.” Willful violations can also be applied to multiple plants in the same company.

The top 10 “serious” violations reported by OSHA for federal FY 2018 were:

- Fall Protection

- Scaffolding

- Hazard Communication

- Ladders

- Lockout/Tagout

- Respiratory Protection

- Machine Guarding

- Powered Industrial Trucks

- Fall Protection – Training Requirements

- Personal Protective and Life Saving Equipment – Eye and Face Protection

All of these violations can be associated with the typical equipment and practices found in manufacturing operations or in other physical plant activities for maintaining processes and equipment.

Willful violations can result in significantly greater penalties: related fines are tripled when a violation is classified as willful, and penalties are greater still for violations at multiple plants under the same corporate ownership.

The top 10 “willful” violations reported by OSHA for FY 2018 were:

- Fall Protection

- Lockout/Tagout

- Grain Handling Facilities

- Requirements for Protective Systems

- Respiratory Protection

- Machine Guarding

- Fall Protection – Training Requirements

- Mechanical Power-Transmission Apparatus

- Hazard Communication

- Permit-Required Confined Spaces

A best practice recommendation is that plant management should take the opportunity to review this list and validate their understanding of the related regulations and the level of compliance at their plant(s).

Opportunities for Improvement

Each of these standards represents an opportunity for assessment and improvement for plant managers and owners in the industry. Violations of these standards can take a variety of forms, including failure to maintain not only the appropriate procedures but also the necessary updates, training, internal inspections, and recordkeeping. Be aware that compliance vulnerabilities can arise from changes over time in processes, personnel, and materials. Managing these changes is known as “management of change” and is necessary for keeping programs current with requirements.

The question for plant owners and managers is, “What should we do now to both meet the requirements and establish a plan should we be confronted with an OSHA inspection?” These represent two related activities that need to be addressed to best ensure that the company can and will achieve the best in both situations.

Taking Action

Assessing how well your existing OSHA programs address compliance issues and potential accidents requires both short-term and longer-term actions. The goal of these actions is to determine what needs to change in your OSHA compliance programs. Results will vary depending on how well the programs were developed, implemented, and updated. We recommend taking the following short-term (if not immediate) actions:

- Schedule a meeting with key management and safety and health staff to develop a plan to review and confirm all OSHA programs for compliance to the standard. It is not uncommon for internal audits and insurance company audits to be based on confirmation of a compliance policy and not the level of detail required in the implementation of the policy. In the meeting, a complete review of programs should be delegated, including a review of the OSHA standards themselves. This should be based on criteria, including the level of detail, training, and updates and supporting logs/records.

- As part of this process, conduct a review of the last three years of internal and loss prevention reports, including local fire. The findings of these should be compared against review findings to verify that necessary changes have been implemented.

- The review should include a review of the OSHA reporting and recordkeeping requirements. Again, this should be based on three years of historical records. Not only should these records be verified to be accurate and complete, but each accident should have resulted in a corrective action, either the correction of an unsafe situation or the retraining of an employee. This information should be checked, as well, and any additional actions taken to fully comply.

- Should you have had any past OSHA inspections at your facility or if you have multiple locations, you need to ensure that proper actions were taken to close these issues. Again, it is very important to verify and assemble all of the related records.

- You will need to require that multiple physical walk-throughs are conducted for all work, personal, and administrative areas for compliance. This should be done by updating or creating a safety inspection checklist for each section, area, and department to confirm that there are no violations in manufacturing and administrative areas alike. Note that one area often missed is the adequate spacing and egress from office cubicles and file rooms.

- Your review should lead to the determination of corrective actions for your programs. These corrective actions need to result in immediate “short-term” updates to your safety programs and their implementation. The updates need to be announced and included in training that is scheduled and documented.

- Concurrently, a qualified person should be assigned to compare the review findings, your accident statistics, and the top OSHA violations. If there are common links among your programs, your statistics, and the OSHA violations lists, an added level of scrutiny should be placed on those areas and the resulting program updates.

- Ensure that all of the basic requirements are met and in compliance, including accident/injury records, training records, inspection logs, and a log for all program updates implemented.
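The comparison described in the list above, between a facility's own accident categories and the top OSHA violations, can be sketched as a simple overlap check. The OSHA list below is the FY 2018 “serious” list given earlier in this article; the site categories are hypothetical, and matching by name alone is a simplification, since real records would need to be mapped to the relevant standards.

```python
# Top 10 "serious" violations for FY 2018, as listed earlier in this article
OSHA_TOP_SERIOUS_FY2018 = [
    "Fall Protection",
    "Scaffolding",
    "Hazard Communication",
    "Ladders",
    "Lockout/Tagout",
    "Respiratory Protection",
    "Machine Guarding",
    "Powered Industrial Trucks",
    "Fall Protection – Training Requirements",
    "Personal Protective and Life Saving Equipment – Eye and Face Protection",
]


def flag_overlap(site_categories, osha_list):
    """Return OSHA list entries that also appear in the site's own categories."""
    wanted = {category.lower() for category in site_categories}
    return [item for item in osha_list if item.lower() in wanted]


# Hypothetical categories drawn from a site's accident statistics
site_categories = ["lockout/tagout", "Ladders", "Ergonomics"]

for item in flag_overlap(site_categories, OSHA_TOP_SERIOUS_FY2018):
    print(f"Added scrutiny recommended: {item}")
```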

Inspection Readiness

Provided that the items above are done correctly, they will greatly reduce your risk of compliance issues and create a safer work environment for your employees in the short term. To fully verify the changes, a confirming review or audit should be completed within a strict 30- to 45-day timeframe, and any additional changes or training should be completed immediately. This will also put you in a much better position for an OSHA inspection should you be selected for one. Some additional points on readiness for an OSHA inspection include the following:

- Ensure that you have or develop a policy for a regulatory inspection that can be implemented immediately. This would cover OSHA and other possible inspections.

- Assign a designate with responsibility for representing the company for an OSHA inspection. Until the designate is ready for an inspection, the inspector should be kept in a neutral office or conference room and away from all levels of activity.

- The tour should be conducted like that for any other visitor. Ensure that the inspector wears all of the required PPE at all points during the tour and receives all of the awareness information required for all personnel. The inspector should never be left alone and should not be allowed to disrupt work activities in any way or at any time.

- Develop a list of the do’s and don’ts of information to be provided or shared with an inspector. When in doubt, decline to answer until the proper answer can be determined. This list should include what the inspector is entitled to request and what you do and do not have to provide. Say very little and even less if asked.

- A copy of all information, notes, photos, and videos should be kept for the company records, and the designate should ask the inspector to answer any related questions.

- Note that if the inspection carries over multiple days, this gives the designate time to verify and communicate specific concerns and to seek direction from senior management or counsel.

- At the closing conference, the inspector will take time for a write-up and to determine apparent violations. During this presentation, you need to challenge any such issues and take the position that there are no violations.

- The inspector will take several weeks to formalize the report, during which time you are not required to answer any follow-up calls.

This information presents a briefing on handling a typical OSHA inspection. Certain events could change how you handle an inspection in follow-up to an accident. Additionally, note that if you conduct an OSHA Program review and make the proper changes, you will be in much better readiness condition for an OSHA inspection. Regardless, the planning and demeanor of the designated contact for the inspection should be the same.

Owners and managers of companies need to focus on prevention and on the overall culture of the company in terms of taking the necessary steps to reduce risk and make prevention part of daily operations. Good practice is to examine the workplace broadly, identifying and assessing hazards, and developing and implementing appropriate controls. This helps ensure employees are protected in the workplace and regulatory compliance is achieved.

OSHA Final Rule: Electronic Recordkeeping

On January 24, 2019, OSHA published the final rule revising the Improve Tracking of Workplace Injuries and Illnesses regulation. This rule rescinds the requirements for establishments with 250 or more employees to electronically file information from OSHA Forms 300 and 301. These establishments will continue to submit information from their Form 300A. In short, all covered employers must electronically submit, as follows:

- Establishments with 250 or more employees that are subject to OSHA’s recordkeeping regulation must electronically submit to OSHA information from the Summary of Work-Related Injuries and Illnesses (OSHA Form 300A). Under the final rule, they are no longer required to electronically submit information from the Log of Work-Related Injuries and Illnesses (OSHA Form 300) or the Injury and Illness Incident Report (OSHA Form 301).

- Establishments with 20-249 employees in certain high-risk industries must electronically submit to OSHA some of the information from the Summary of Work-Related Injuries and Illnesses (OSHA Form 300A).

- Establishments with fewer than 20 employees at all times during the year do not have to routinely submit information electronically to OSHA.
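The size thresholds above can be expressed as a small decision function. This is a simplified sketch that follows the 2019 final rule as summarized in the opening paragraph of this section (Form 300A only); the function and parameter names are hypothetical, and whether an establishment is covered by the recordkeeping regulation or falls in a designated high-risk industry must be confirmed against the regulation itself.

```python
def forms_to_submit(peak_employees, covered_by_recordkeeping=True,
                    designated_high_risk_industry=False):
    """Return the OSHA forms an establishment submits electronically.

    Simplified reading of the 2019 final rule; not legal guidance.
    """
    if not covered_by_recordkeeping:
        return []
    if peak_employees >= 250:
        return ["300A"]
    if 20 <= peak_employees <= 249 and designated_high_risk_industry:
        return ["300A"]
    # Fewer than 20 employees, or 20-249 outside the designated industries
    return []


print(forms_to_submit(300))
print(forms_to_submit(120, designated_high_risk_industry=True))
print(forms_to_submit(15))
```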

In the final rule, OSHA emphasized that the new rule does not change any employer’s obligation to complete and retain injury and illness records under OSHA’s regulations for recording and reporting occupational injuries and illnesses.

Additionally, OSHA is amending the regulation to require employers to submit their Employer Identification Number (EIN) electronically as part of their submission to enhance data and further improve worker safety and health.

Post Your OSHA 300A by February 1

Do not forget! The OSHA Form 300A summary must be posted every year by February 1, summarizing all recordable injuries and illnesses from the previous year. The summary must remain visible from February 1 until April 30 and must be signed by a company executive or authorized representative, certifying that the information is true and accurate.
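A simple date check can serve as a reminder of the posting window. The sketch below uses the February 1 through April 30 window stated above; the function names are hypothetical.

```python
from datetime import date


def must_be_posted(today):
    """True if the Form 300A must be on display on the given date."""
    return date(today.year, 2, 1) <= today <= date(today.year, 4, 30)


def summary_year(today):
    """The calendar year that the posted Form 300A covers."""
    return today.year - 1


check_date = date(2020, 3, 15)
print(must_be_posted(check_date), summary_year(check_date))
```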