
Making Your SMS Work for You

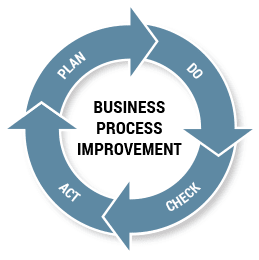

A Safety Management System (SMS) is a systematic organization of policies, processes, programs, procedures, and records. Like other management systems, an SMS is built on the Plan, Do, Check, Act/Adjust (PDCA) cycle. Ideally, safety-related activities are planned, done, checked (through auditing, inspections, investigations), and finally reviewed by both local and executive management to facilitate continuous improvement (i.e., adjust).

Keys to a Successful SMS

The primary purpose of an SMS is to effectively manage safety-related risks. But how does an organization ensure that its SMS—new or existing—actually does this? Kestrel has compiled the following best practice tips for implementing an effective SMS:

- All employees with management or supervisory responsibilities must be visibly and conspicuously committed to safety and the SMS. Management demonstrates leadership and promotes commitment to improving safety performance through active and visible participation. It is up to management to routinely demonstrate that this is not just the “flavor of the month” but the organization’s way of doing business. (Choudhry, Fang & Ahmed, 2008; Hansen, 2006; Lyon & Hollcroft, 2006; OSHA, 2015)

- Employees are engaged in the SMS—emotionally and cognitively. Employees must understand how the SMS works and believe in the value that it offers them and the organization. (Wachter & Yorio, 2014; Moraru, Babut & Cioca, 2011)

- The SMS is integrated into other business objectives and aligned with other in-place management systems (e.g., quality, environmental). The SMS should support the company’s goals and objectives. Aligning and integrating with other systems further improves efficiency, consistency, and understanding. This also provides the flexibility needed to function in a dynamic business environment. (Hansen, 2006)

- There are clearly defined safety policies and principles. Policies should be established, communicated, and updated, as necessary. (Hansen, 2006)

- The SMS establishes challenging objectives, goals, and plans. High standards of performance that are tracked and measured ultimately lead to performance improvements. (Hansen, 2006; OSHA, 2015)

- Contractors and other third parties are effectively managed. Contractors, suppliers, and others must be assessed and monitored for their capabilities and performance. Clear performance standards should be established to ensure that these third parties meet needs and uphold safety management expectations. (Hansen, 2006; OSHA, 2015)

- The SMS ensures compliance with legal and other requirements. The SMS should help the organization to measure and verify compliance with applicable legal and regulatory requirements. (Hansen, 2006)

- There is effective communication about the SMS, including clearly defined roles and responsibilities. Employees need to understand the purpose of the SMS and their roles in achieving related goals and objectives. (Hansen, 2006; OSHA, 2015)

- Staff receive continuous safety training and development opportunities. Safe operations rely on well-trained employees and contractors who understand the SMS and how to perform their jobs in the safest ways possible. (Choudhry, Fang & Ahmed, 2008; Moraru, Babut & Cioca, 2011; Lyon & Hollcroft, 2006; OSHA, 2015)

- The organization is committed to hazard identification, risk assessment, and implementing effective controls. Identifying, assessing, and prioritizing hazards can mitigate risks to employees, customers, contractors, and the general public. Procedures should be put into place to continually identify workplace hazards and evaluate risks. Doing so must be a continuous process with periodic inspections to identify new hazards. (Hansen, 2006; OSHA, 2015)

- The organization conducts injury and incident investigations, produces reports, and follows through on corrective actions. Effective incident investigations provide the opportunity to learn about and improve safety performance. Investigations should identify the root cause and contributing factors, determine and track corrective actions, and share lessons learned across the organization to prevent recurrence. Perhaps most importantly, the organization should refrain from using the investigation to figure out who to blame for the incident. Fault-finding, rather than fact-finding, leads to mistrust and a negative safety culture. (Singh, 2014; OSHA, 2015)

- Audits provide the opportunity for ongoing re-evaluation and to demonstrate a strong commitment to continuous improvement. The SMS must be regularly reviewed to ensure that it is delivering consistent, desired performance. Planning and implementing internal audits helps verify whether safety processes and activities are meeting goals and creating the desired outcomes. Audits also help determine the effectiveness of the SMS and uncover new opportunities to systematically guide the PDCA continual improvement process. Sharing best practices and lessons learned further promotes ongoing improvement. (Hansen, 2006; Choudhry, Fang & Ahmed, 2008)

- Risk-based, data-driven decision-making is informed by both leading and lagging indicators. While lagging indicators provide valuable information for SMS improvement, leading indicators provide that information without waiting until someone gets hurt. Advanced statistical techniques and predictive analytics can use leading indicators to help predict where and when incidents are more likely to occur. Organizations can make significant safety performance improvements by making a strategic, sustainable investment in gathering and analyzing leading indicators.

- Implementation is guided from the top down; buy-in is obtained in all levels of the organization. Ownership of the SMS resides with the safety department and executive management, while ownership of implementation and performance resides with all departments and operations. Safety should be continually reinforced as a line-organization responsibility. (OSHA, 2015; Choudhry, Fang & Ahmed, 2008; Moraru, Babut & Cioca, 2011)

- The SMS builds on and improves what already exists. The SMS should fit within the organization’s existing business structure and be tailored to the organization’s needs, operations, risks, processes, culture, and existing strengths.
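To make the leading/lagging distinction concrete, here is a minimal Python sketch. It computes one common lagging indicator, an OSHA-style incidence rate (cases per 200,000 hours worked, i.e., 100 full-time workers at 2,000 hours each), alongside two leading indicators chosen purely for illustration: near-miss reports normalized the same way, and the share of scheduled inspections actually completed. The function names, the choice of leading indicators, and the figures are hypothetical.

```python
def incidence_rate(cases: int, hours_worked: float) -> float:
    """Cases per 200,000 hours worked (100 full-time workers x 2,000 hours/year).

    Applied to recordable injuries this is a lagging indicator; applied to
    near-miss reports (an illustrative choice) it can serve as a leading one.
    """
    if hours_worked <= 0:
        raise ValueError("hours_worked must be positive")
    return cases * 200_000 / hours_worked


def completion_rate(completed: int, scheduled: int) -> float:
    """Fraction of scheduled proactive activities (e.g., inspections) completed."""
    if scheduled <= 0:
        raise ValueError("scheduled must be positive")
    return completed / scheduled


if __name__ == "__main__":
    hours = 400_000  # hypothetical annual hours worked at one site
    print("Recordable rate (lagging):", incidence_rate(3, hours))        # 1.5
    print("Near-miss rate (leading):", incidence_rate(40, hours))        # 20.0
    print("Inspections completed (leading):", completion_rate(45, 50))   # 0.9
```

Normalizing both counts by hours worked keeps sites of different sizes comparable; the leading figures can be tracked every month, whereas the lagging figure only moves after someone is hurt.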

SMS Benefits

For organizations that are able to implement a strong SMS, there can be many benefits. For example, in 2006 the Health and Safety Executive in the UK published six case studies illustrating the benefits of implementing an SMS (Greenstreet Berman Ltd, 2006). Some of the business benefits identified in these case studies included the following:

- 50% reduction in absenteeism

- Static or decreased insurance premiums

- Access to wider market based on improved safety outcomes

Another case study, published in 2010, describes Newell Rubbermaid’s SMS success: the company realized an 80% reduction in recordables and an 81% reduction in workers’ compensation costs after implementing a proactive SMS (Zahn, 2010).

In general, most organizations that adhere to the best practices described above may realize:

- Improved health and safety performance and compliance

- Greater operational efficiency

- Reduced injuries and injury-related costs

- Lower insurance premiums by demonstrating to insurers that risk is effectively controlled

- Better morale when employees see employers actively looking after their health and safety

- Improved reputation that comes with the public noticing the organization’s responsible attitude toward employees

- Improved business efficiency and, correspondingly, reduced costs


Know Where You Stand: Facility Safety

Strong safety performance is a cornerstone of any business. For many companies, it can make the difference in qualifying to work with customers and successfully expanding the business. At the other end of the spectrum, repeated safety incidents can lead to serious penalties for failing to comply with OSHA safety requirements, as well as higher insurance rates.

Safe facilities, work practices, and training help to attract and retain employees and enable them to go home at the end of the shift without workplace injury or concerns. In addition, workers’ compensation rates and the ability to maintain adequate insurance both depend on an organization’s safety performance.

OSHA Safety Compliance and Inspection

Safety compliance with federal regulations is getting much more attention from the Occupational Safety and Health Administration (OSHA) due, in part, to a number of significant accidents in recent years. Many have involved manufacturing in high-risk industries, where the accidents caused injuries, evacuations, environmental impacts, and significant business disruption. While not frequent, accidents like these draw attention from the public and communities, news media, regulators, and regulatory agencies. This, in turn, has increased scrutiny on all businesses, especially those in high-risk industries or with a history of safety issues. When it comes to safety compliance, an organization should never be overly confident.

OSHA has placed increased emphasis on inspections for non-compliance with safety regulations. OSHA tracks the types of safety violations found and their classification as “serious” or “willful”. A serious violation is defined as “one in which there is a substantial probability that death or serious physical harm could result, and the employer knew or should have known of the hazard”. A willful violation is defined as one “committed with an intentional disregard of or plain indifference to the requirements of the Occupational Safety and Health Act and requirements”. Willful violations can also be applied to multiple plants in the same company.

The top 10 “serious” violations reported by OSHA for federal FY 2018 were:

- Fall Protection

- Scaffolding

- Hazard Communication

- Ladders

- Lockout/Tagout

- Respiratory Protection

- Machine Guarding

- Powered Industrial Trucks

- Fall Protection – Training Requirements

- Personal Protective and Life Saving Equipment – Eye and Face Protection

All of these violations can be associated with the equipment and practices typical of manufacturing operations or other physical plant activities for maintaining processes and equipment.

Willful violations can result in significantly greater penalties: the maximum fine for a willful violation is ten times the maximum for a serious violation, and penalties can be even greater for violations at multiple plants under the same corporate ownership.

The top 10 “willful” violations reported by OSHA for FY 2018 were:

- Fall Protection

- Lockout/Tagout

- Grain Handling Facilities

- Requirements for Protective Systems

- Respiratory Protection

- Machine Guarding

- Fall Protection – Training Requirements

- Mechanical Power-Transmission Apparatus

- Hazard Communication

- Permit-Required Confined Spaces

A best practice recommendation is that plant management should take the opportunity to review this list and validate their understanding of the related regulations and the level of compliance at their plant(s).

Opportunities for Improvement

Each of these standards represents an opportunity for assessment and improvement for plant managers and owners in the industry. Violations of these standards can take a variety of forms, including a failure to have not only the appropriate procedures but also the necessary updates, training, internal inspections, and recordkeeping. Be aware that compliance vulnerabilities can arise from changes that accumulate over time, including changes in processes, personnel, and materials. Managing these changes, known as “management of change,” is necessary to keep programs current with requirements.

The question for plant owners and managers is, “What should we do now to both meet the requirements and establish a plan should we be confronted with an OSHA inspection?” These are two related activities, and both need to be addressed to ensure that the company is well prepared in either situation.

Taking Action

Assessing how well your existing OSHA programs address compliance issues and potential accidents requires both short-term and longer-term actions. The goal of these actions is to determine what needs to change in your OSHA compliance programs. Results will vary depending on how well the programs were developed, implemented, and updated; regardless, the aim is a reliable way to make this determination. We recommend taking the following short-term (if not immediate) actions:

- Schedule a meeting with key management and safety and health staff to develop a plan to review and confirm that all OSHA programs comply with the applicable standards. It is not uncommon for internal audits and insurance company audits to confirm only that a compliance policy exists, rather than the level of detail required to implement the policy. In the meeting, delegate a complete review of programs, including a review of the OSHA standards themselves. This review should be based on criteria including the level of detail, training, updates, and supporting logs/records.

- As part of this process, review the last three years of internal and loss prevention reports, including local fire department inspection reports. Compare the findings of these reports against the current review findings to verify that necessary changes have been implemented.

- The review should also cover OSHA reporting and recordkeeping requirements, again based on three years of historical records. Not only should these records be verified as accurate and complete, but each accident should have resulted in a corrective action, either correcting an unsafe condition or retraining an employee. Check this information as well, and take any additional actions needed to fully comply.

- If your facility has had any past OSHA inspections, or if you have multiple locations, ensure that proper actions were taken to close any resulting issues. Again, it is very important to verify and assemble all of the related records.

- Require that multiple physical walk-throughs be conducted in all work, personal, and administrative areas to check compliance. Do this by updating or creating a safety inspection checklist for each section, area, and department to confirm that there are no violations in manufacturing and administrative areas alike. Note that one area often missed is adequate spacing and egress from office cubicles and file rooms.

- Your review should identify corrective actions for your programs. These corrective actions need to result in immediate, short-term updates to and implementation of your safety programs. Announce the changes and include them in training, which will need to be scheduled and documented.

- Concurrently, assign a qualified person to compare the review findings, your accident statistics, and the top OSHA violations. If the same program areas show up across the review findings, accident statistics, and OSHA violations report, place an added level of scrutiny on those areas and the resulting program updates.

- Ensure that all of the basic requirements are met and in compliance, including accident/injury records, training records, inspection logs, and a log for all program updates implemented.
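The comparison of review findings, accident statistics, and top OSHA violations described above can be sketched in a few lines of Python, assuming each source has already been reduced to a set of program-area names spelled consistently. `cross_reference`, the sample review and incident sets, and the "watch" tier for areas named by exactly two of the three sources are hypothetical; the text only calls for added scrutiny where all three line up.

```python
from collections import Counter
from typing import Dict, Iterable, List


def cross_reference(
    review_findings: Iterable[str],
    incident_topics: Iterable[str],
    top_violations: Iterable[str],
) -> Dict[str, List[str]]:
    """Count how many of the three sources mention each program area."""
    counts: Counter = Counter()
    for source in (review_findings, incident_topics, top_violations):
        counts.update(set(source))  # set(): each source counts a topic at most once
    return {
        # Linked across review, incidents, and the OSHA list: added scrutiny.
        "added_scrutiny": sorted(t for t, n in counts.items() if n == 3),
        # Hypothetical second tier: named by exactly two sources.
        "watch": sorted(t for t, n in counts.items() if n == 2),
    }


# First eight entries of the FY 2018 "serious" list above.
TOP_SERIOUS_FY2018 = [
    "Fall Protection", "Scaffolding", "Hazard Communication", "Ladders",
    "Lockout/Tagout", "Respiratory Protection", "Machine Guarding",
    "Powered Industrial Trucks",
]

if __name__ == "__main__":
    review = {"Lockout/Tagout", "Machine Guarding", "Emergency Egress"}  # hypothetical
    incidents = {"Lockout/Tagout", "Powered Industrial Trucks", "Emergency Egress"}  # hypothetical
    print(cross_reference(review, incidents, TOP_SERIOUS_FY2018))
```

Keeping the tiers separate lets the "added scrutiny" areas go first in the short-term updates, while the two-source areas are still visible to whoever schedules the confirming review.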

Inspection Readiness

Completing the items above correctly will greatly reduce your risk of compliance issues and create a safer work environment for your employees in the short term. To fully verify the changes, complete a confirming review or audit within a strict 30- to 45-day timeframe, and complete any additional changes or training immediately. This will also put you in a much better position should your facility be selected for an OSHA inspection. Additional points for inspection readiness include the following:

- Ensure that you have or develop a policy for a regulatory inspection that can be implemented immediately. This would cover OSHA and other possible inspections.

- Assign a designee with responsibility for representing the company during an OSHA inspection. Until the designee is ready, keep the inspector in a neutral office or conference room, away from work activity.

- Conduct the tour as you would for any other visitor. Ensure that the inspector wears all required PPE at all points during the tour and receives the same safety awareness information required for all personnel. The inspector should never be left alone and should not be allowed to disrupt work activities at any time.

- Develop a list of do’s and don’ts regarding information to be provided to or shared with an inspector. This list should include what the inspector is entitled to request and what you do and do not have to provide. When in doubt, decline to answer until the proper answer can be determined. Keep answers brief, and do not volunteer information beyond what is asked.

- Keep a copy of all information, notes, photos, and videos for the company records, and have the designee ask the inspector to answer any related questions.

- Note that if the inspection continues over multiple days, the designee will have an opportunity to verify specific concerns and communicate them to senior management or counsel for direction.

- At the closing conference, the inspector will present a write-up of any apparent violations. During this presentation, challenge any such issues and take the position that there are no violations.

- The inspector will take several weeks to formalize the report, during which time you are not required to answer any follow-up calls.

This information is a briefing on handling a typical OSHA inspection. Certain events, such as an inspection that follows an accident, could change how you handle it. Additionally, if you conduct an OSHA program review and make the proper changes, you will be much better prepared for an OSHA inspection. Regardless, the planning and demeanor of the designated contact for the inspection should be the same.

Owners and managers of companies need to focus on prevention and on the overall culture of the company in terms of taking the necessary steps to reduce risk and make prevention part of daily operations. Good practice is to examine the workplace broadly, identifying and assessing hazards, and developing and implementing appropriate controls. This helps ensure employees are protected in the workplace and regulatory compliance is achieved.


OSHA Final Rule: Electronic Recordkeeping

On January 24, 2019, OSHA published the final rule revising the Improve Tracking of Workplace Injuries and Illnesses regulation. This rule rescinds the requirement for establishments with 250 or more employees to electronically file information from OSHA Forms 300 and 301. These establishments will continue to submit information from their Form 300A. In short, the electronic submission requirements are as follows:

- Establishments with 250 or more employees that are subject to OSHA’s recordkeeping regulation must electronically submit to OSHA information from the Summary of Work-Related Injuries and Illnesses (OSHA Form 300A). They are no longer required to submit information from the Log of Work-Related Injuries and Illnesses (OSHA Form 300) or the Injury and Illness Incident Report (OSHA Form 301).

- Establishments with 20-249 employees in certain high-risk industries must electronically submit to OSHA some of the information from the Summary of Work-Related Injuries and Illnesses (OSHA Form 300A).

- Establishments with fewer than 20 employees at all times during the year do not have to routinely submit information electronically to OSHA.
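The thresholds above can be expressed as a small decision function. This is a minimal Python sketch assuming the 2019 rule exactly as summarized in this post; `forms_to_submit`, `peak_employees`, and `in_designated_industry` are hypothetical names, and the industry flag stands in for OSHA's published list of designated high-risk industries, which you would need to check separately. It models routine submission only.

```python
from typing import List


def forms_to_submit(
    peak_employees: int,
    subject_to_recordkeeping: bool,
    in_designated_industry: bool,
) -> List[str]:
    """Forms whose data an establishment routinely submits electronically (2019 rule, simplified).

    peak_employees: highest headcount at any time during the previous calendar year.
    in_designated_industry: hypothetical flag for OSHA's list of high-risk industries.
    """
    if peak_employees >= 250 and subject_to_recordkeeping:
        return ["300A"]  # Forms 300 and 301 were rescinded for this group
    if 20 <= peak_employees < 250 and in_designated_industry:
        return ["300A"]
    return []  # fewer than 20 employees, or not covered above: no routine submission


if __name__ == "__main__":
    for case in [(300, True, False), (120, True, True), (120, True, False), (15, True, True)]:
        print(case, "->", forms_to_submit(*case))
```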

In the final rule, OSHA emphasized that the rule does not change any employer’s obligation to complete and retain injury and illness records under OSHA’s regulations for recording and reporting occupational injuries and illnesses.

Additionally, OSHA is amending the regulation to require employers to submit their Employer Identification Number (EIN) electronically as part of their submission, to make the data more useful and further improve worker safety and health.

Post Your OSHA 300A by February 1

Do not forget! The OSHA Form 300A summary must be posted every year by February 1, summarizing all recordable injuries and illnesses from the previous year. The summary must remain visible from February 1 until April 30, and must be signed by a company executive or authorized representative, indicating that the information is true and accurate.
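A minimal Python sketch of the posting rule, assuming only the February 1 to April 30 window described above; `posting_window` and `must_be_posted` are hypothetical helper names, and the sketch does not check the signature requirement.

```python
from datetime import date
from typing import Tuple


def posting_window(summary_year: int) -> Tuple[date, date]:
    """Form 300A covering `summary_year` is posted Feb 1 through Apr 30 of the next year."""
    return date(summary_year + 1, 2, 1), date(summary_year + 1, 4, 30)


def must_be_posted(summary_year: int, today: date) -> bool:
    """True while the summary for `summary_year` must be on display (inclusive dates)."""
    start, end = posting_window(summary_year)
    return start <= today <= end


if __name__ == "__main__":
    print(must_be_posted(2018, date(2019, 3, 1)))  # True
    print(must_be_posted(2018, date(2019, 5, 1)))  # False
```

A check like this could feed a reminder in whatever calendar or compliance system already tracks other recurring obligations.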


How to Effectively Resource Compliance Obligations

Regulatory enforcement, customer and supply chain audits, and internal risk management initiatives are all driving requirements for managing regulatory compliance obligations. Many companies—especially those that are not large enough for a dedicated team of full-time staff—struggle with how to effectively resource their regulatory compliance needs.

Striking a Balance

Using a combination of in-house and outsourced resources can provide the appropriate balance to manage regulatory obligations and maintain compliance.

Outsourcing provides an entire team of resources with a breadth of knowledge/experience and the capacity to complete specific projects, as needed. At the same time, engaging in-house resources allows the organization to optimize staff duties and ensure that critical know-how is being developed internally to sustain compliance into the future.

Programmatic Approach to Compliance Management

Taking a balanced, programmatic approach that relies on internal and external resources and follows the three phases outlined below allows small to mid-size companies to create standardized compliance management solutions and to more efficiently:

- Identify issues and gaps in regulatory compliance

- Achieve compliance with current obligations

- Realize improvements to compliance management

- Gain the ability to review and continually improve compliance performance

Phase 1: Compliance Assessment

A compliance assessment provides the baseline to improve compliance management and performance in accordance with current business operations and future plans. The assessment should answer the following questions:

- How complete and robust is the existing compliance management program in comparison with standard industry practice?

- Does it have the capability to yield consistent and reliable regulatory compliance assurance?

- What improvements are needed to consistently and reliably achieve compliance and company objectives?

It is important to understand how complete, well-documented, understood, and implemented the current processes and procedures are. Culture, model, processes, and capacity should all be assessed to determine the company’s overall compliance process maturity.

Phase 2: Compliance and Program Improvements

The initial analysis of the assessment forms the basis for developing recommendations and priorities for an action plan to strengthen programs, building on what already exists. The goal of Phase 2 is to begin closing the compliance gaps identified in Phase 1 by implementing corrective actions, including programs, permits, reports, training, etc.

Phase 2 answers the following questions:

- What needs to be done to address gaps and attain compliance?

- What improvements are required to existing programs?

- What resources are required to sustain compliance?

Phase 3: Ongoing Program Management

The goal of Phase 3 is to improve program processes to eliminate compliance gaps and transition the company from outsourced compliance into compliance process improvement/program development and implementation. This is done by managing the eight functions of compliance—identifying what’s needed, who does it, and when it is due. Ongoing maintenance support may include periodic audits, training, management review assistance, Information Systems (IS) support, and other ongoing compliance activities.
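The "what is needed, who does it, and when it is due" framing lends itself to a simple compliance register. The Python sketch below is a hypothetical illustration, not Kestrel's tooling: each `Obligation` records the requirement, the owner, and the due date, and two helpers list overdue and upcoming items. The entries and the 30-day look-ahead are made up.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Sequence


@dataclass(frozen=True)
class Obligation:
    requirement: str  # what is needed
    owner: str        # who does it
    due: date         # when it is due
    done: bool = False


def overdue(register: Sequence[Obligation], today: date) -> List[Obligation]:
    """Open obligations whose due date has passed, oldest first."""
    return sorted((o for o in register if not o.done and o.due < today), key=lambda o: o.due)


def upcoming(register: Sequence[Obligation], today: date, days: int = 30) -> List[Obligation]:
    """Open obligations due within the next `days` days (inclusive), soonest first."""
    horizon = today + timedelta(days=days)
    return sorted(
        (o for o in register if not o.done and today <= o.due <= horizon),
        key=lambda o: o.due,
    )


# Hypothetical register entries for illustration only.
REGISTER = [
    Obligation("Post OSHA Form 300A summary", "Plant manager", date(2019, 2, 1), done=True),
    Obligation("Hazard communication training for new hires", "EHS coordinator", date(2019, 3, 15)),
    Obligation("Lockout/tagout periodic inspection", "Maintenance lead", date(2019, 4, 10)),
]

if __name__ == "__main__":
    today = date(2019, 3, 20)
    for o in overdue(REGISTER, today):
        print("OVERDUE:", o.requirement, "->", o.owner)
    for o in upcoming(REGISTER, today):
        print("DUE SOON:", o.requirement, "->", o.owner, o.due.isoformat())
```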

Case Study

For one Kestrel client, business has grown at a rate that prompted proactive management of the company’s regulatory and compliance obligations. Following a Right-Sized Compliance approach, Kestrel assessed the company’s current compliance status and programs/processes/procedures against regulatory requirements. This initial assessment provided the critical information the Kestrel team needed to help guide the company’s ongoing compliance improvements.

Coming out of the onsite assessment, Kestrel identified opportunities for improvement. Using industry-standard program templates combined with operation-specific customization, Kestrel created programs to address the improvements identified in the assessment. Kestrel then provided onsite training sessions and is working with the company to develop a prioritized action plan for ongoing compliance management.

Using the appropriate methods, processes, and technology tools, Kestrel’s programmatic approach is allowing this company to implement EHS programs that are designed to sustain ongoing compliance, achieve continual improvements, and manage compliance with efficiency through this time of accelerated growth.

Making the Connection

Kestrel’s experience suggests that the connection between management and compliance needs to be well synchronized, with reliable and effective regulatory compliance commonly being an outcome of consistent and reliable program implementation. This connection is especially important to avoid recurring compliance issues.

Following a programmatic approach allows companies to realize improvements to their compliance management and:

- Organize requirements into documented programs that outline procedures, roles/responsibilities, training requirements, etc.

- Support management efforts with technology tools that create efficiencies and improved data management

- Conduct the ongoing monitoring and management that are vital to remain in compliance

- Gain the inherent capacity, capability, and maturity to comply, review, and continually improve compliance performance


Compliance Assurance Review

An audit provides a snapshot in time of a company’s compliance status. An essential component of any compliance program—health and safety, environmental, food safety—an audit captures compliance status and provides the opportunity to identify and correct potential business losses. But what about sustaining ongoing compliance beyond that one point in time? How does a company know if it has the processes in place to ensure ongoing compliance?

Creating a Path to Compliance Assurance

A compliance assurance review looks beyond the “point-in-time” compliance to critically evaluate how the company manages compliance programs, processes, and activities, with compliance assurance as the ultimate goal. It can also be used as a process improvement tool, while ensuring compliance with all requirements applicable to the company.

This type of review is ideal for companies that already have a management system in place or strive to approach compliance with health and safety, environmental, or food safety requirements under a management system framework.

Setting the Scope

The scope of the review is tailored to a company’s needs. It can be approached by:

- Compliance program/topic where the company has had routine compliance failures

- Compliance program/topic that presents a high risk to the company

- Compliance program/topic that spans across multiple facilities that report to a central function

- Location/product line/project where the company is looking to streamline a process while still ensuring compliance with multiple legal and other requirements

While each program, project, or location may differ in breadth of regulatory requirements, enforcement priorities, size, complexity, operational control responsibilities, etc., all compliance assurance reviews progress through a standard process that ties back to the management system.

Continual Compliance Improvements

Through a compliance assurance review, the company will define and understand:

- Compliance requirements and where regulated activities occur throughout the organization

- Current company programs and processes used to manage those activities and the associated level of program/process maturity

- Deficiencies in compliance program management and opportunities for improvement

- How to feed review recommendations back into elements of the management system to create a roadmap for sustaining and continually improving compliance

OSHA: Using Drones for Inspections

Once a niche market, drones are entering an important new phase: everyday use in the workplace. It’s no longer just tech-savvy companies that are using drones. Enterprise-level drone operations are becoming a big deal, and not just in industry. During 2018, the Occupational Safety and Health Administration (OSHA) reportedly used drones to conduct at least nine inspections of employer facilities.

OSHA’s drones were most frequently used following accidents at worksites that were considered too dangerous for OSHA inspectors to enter, a common and important benefit of drone technology. However, it is likely that OSHA’s use of drones will quickly expand to include more routine facility reviews, as drones can give OSHA inspectors a detailed view of a facility, expanding the areas that can be easily viewed and reducing the time required to conduct an inspection on the ground.

Employers must currently grant OSHA permission to conduct facility inspections with drones, and there are many implications industry must consider in giving this permission. Read the full article in EHS Today to learn more.


5 Questions to Implement Predictive Analytics

How much data does your company generate associated with your business operations? What do you do with that data? Are you using it to inform your business decisions and, potentially, to avoid future risks?

Predictive Analytics and Safety

Predictive analytics is a field that focuses on analyzing large data sets to identify patterns and predict outcomes to help guide decision-making. Many leading companies currently use predictive analytics to identify marketing and sales opportunities. However, the potential benefits of analyzing data in other areas—particularly safety—are considerable.

For example, safety and incident data can be used to predict when and where incidents are likely to occur. Appropriate data analysis strategies can also identify the key factors that contribute to incident risk, thereby allowing companies to proactively address those factors to avoid future incidents.
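To make the idea concrete, the minimal Python sketch below fits a logistic regression, one common choice for a yes/no outcome such as "an incident occurred," using plain batch gradient descent and only the standard library. The source does not prescribe a model, so this is a swapped-in illustration; the feature names and the eight toy records are made up. The third column is constructed so that it carries no information about the outcome (each record appears once with each value), so its weight stays at zero, while the overtime weight grows, which is the kind of signal that points to a key contributing factor.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Estimated probability of an incident for one record."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def fit_logistic(
    X: Sequence[Sequence[float]], y: Sequence[int], lr: float = 0.1, epochs: int = 2000
) -> Tuple[List[float], float]:
    """Fit logistic regression weights with plain batch gradient descent on log-loss."""
    n_features, m = len(X[0]), len(X)
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # derivative of log-loss w.r.t. the logit
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


# Hypothetical toy records: [overtime_scaled, overdue_inspections, shift_code]
FEATURES = ["overtime_scaled", "overdue_inspections", "shift_code"]
X = [
    [2.0, 1.0, 1.0], [2.0, 1.0, -1.0],
    [1.5, 0.0, 1.0], [1.5, 0.0, -1.0],
    [0.3, 0.0, 1.0], [0.3, 0.0, -1.0],
    [0.5, 1.0, 1.0], [0.5, 1.0, -1.0],
]
y = [1, 1, 1, 1, 0, 0, 0, 0]  # 1 = incident occurred

if __name__ == "__main__":
    w, b = fit_logistic(X, y)
    for name, weight in zip(FEATURES, w):
        print(f"{name:>20}: {weight:+.3f}")
    print("Risk, new record:", round(predict_proba(w, b, [1.8, 1.0, 1.0]), 3))
```

Comparing weights this way is only meaningful when features are on comparable scales, and because the toy data are perfectly separable the weights keep growing with more epochs. A real project would typically use an established statistics library and check significance and out-of-sample performance rather than rely on a hand-rolled fit.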

Key Questions

Many companies already have large amounts of data they are gathering. The key is figuring out how to use that data intelligently to guide future business decision-making. Here are five key questions to guide you in integrating predictive analytics into your operations.

- What are you interested in predicting? What risks are you trying to mitigate?

Defining your desired result not only focuses the project but also narrows the brainstorming of risk factors and data sources. This definition can, and should, be referenced throughout the project to ensure your work is designed appropriately to meet the defined objectives.

- How do you plan to use the predictions to improve operations? What is your goal of implementing a predictive analytics project?

Thinking beyond the project to overall operational improvements provides bigger-picture insight into the desired business outcomes. This helps when identifying the format the results should take to maximize their usefulness in the field and/or for management. It also helps ensure that the work focuses on variables that can be controlled to improve operations: a risk factor that cannot be changed offers no way to mitigate the risk.

- Do you currently collect any data related to this?

Understanding your current data will help determine whether additional data collection efforts are needed. You should be able to describe the characteristics of the data you have, if any, for the outcome you want to predict (e.g., digital vs. paper/scanned, quantitative vs. qualitative, accuracy, precision, and whether the data are gathered regularly). The benefits and limitations associated with each of these characteristics will be discussed in a future article.

- What are some risk factors for the desired outcome? What are some things that may increase or decrease the likelihood that your outcome will happen?

These factors are the variables you will use for prediction in the model. It is valuable to brainstorm with all relevant subject matter experts (e.g., management, operations, engineering, and third parties, as appropriate) to get a complete picture. After brainstorming, narrow the list of risk factors based on the availability and quality of the data, whether each factor can be managed or controlled, and a subjective evaluation of its strength. The modeling process will ultimately suggest which risk factors contribute significantly to the outcome.

- What data do you have, if any, for the risk factors you identified?

Again, you need to understand and be able to describe your current data to determine whether they are sufficient to meet your desired outcomes. Using data that have already been gathered can expedite the modeling process, but only if those data are appropriate in format and content for the process you want to predict. If they are not, using them in modeling can cause delays or produce misleading results.
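The last two questions, identifying risk factors and describing the data behind them, can be sketched together: profile each field in an existing data export, then shortlist factors using the criteria described above (data availability, controllability, and SME-rated strength). Everything here is hypothetical. The column names, the controllability flags, the 1-5 strength scores, and the thresholds are illustrative assumptions, not recommended cutoffs.

```python
import csv
import io


def is_number(text):
    """True if the text parses as a number (treated here as quantitative data)."""
    try:
        float(text)
        return True
    except ValueError:
        return False


def profile_columns(rows):
    """Summarize each column: share of non-blank values and whether values look numeric."""
    if not rows:
        return {}
    profile = {}
    for column in rows[0].keys():
        values = [(row.get(column) or "").strip() for row in rows]
        present = [v for v in values if v]
        numeric = bool(present) and all(is_number(v) for v in present)
        profile[column] = {
            "completeness": len(present) / len(values),
            "kind": "quantitative" if numeric else "qualitative",
        }
    return profile


def shortlist(profile, controllable, sme_strength, min_completeness=0.7, min_strength=3):
    """Keep factors with enough data, that can be controlled, and that SMEs rate as reasonably strong.

    Thresholds are hypothetical; strongest candidates are returned first.
    """
    kept = [
        name for name, stats in profile.items()
        if stats["completeness"] >= min_completeness
        and controllable.get(name, False)
        and sme_strength.get(name, 0) >= min_strength
    ]
    return sorted(kept, key=lambda name: sme_strength[name], reverse=True)


# Hypothetical incident-log export; column names are illustrative only
SAMPLE = """site,overtime_hours,equipment_age_years,ambient_temp_c,supervisor_notes
Plant A,12,4,31,loose guard rail
Plant B,,7,18,
Plant A,3,,25,near miss at dock
Warehouse C,0,2,22,
"""

# Hypothetical judgments from an SME brainstorming session
controllable = {"overtime_hours": True, "equipment_age_years": True,
                "ambient_temp_c": False, "supervisor_notes": True}
sme_strength = {"overtime_hours": 4, "equipment_age_years": 3,
                "ambient_temp_c": 4, "supervisor_notes": 3}

rows = list(csv.DictReader(io.StringIO(SAMPLE)))
profile = profile_columns(rows)
for column, stats in profile.items():
    print(f"{column}: {stats['completeness']:.0%} complete, {stats['kind']}")
print(shortlist(profile, controllable, sme_strength))
# ['overtime_hours', 'equipment_age_years']
```

In this example, ambient temperature is dropped because it cannot be controlled, and the supervisor notes are dropped because half of the records are blank.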

Predictive analytics is versatile enough to help companies analyze a wide variety of problems, provided that the data, the desired project outcomes, and the intended business or operational improvements are well defined. With predictive analytics, companies gain the capacity to:

- Explore and investigate past performance

- Gain the insights needed to turn vast amounts of data into relevant and actionable information

- Create statistically valid models to facilitate data-driven decisions
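For a yes/no outcome such as "did an incident occur on this shift," logistic regression is one common modeling choice. The sketch below is an illustration in plain Python under stated assumptions: the risk factors and shift data are hypothetical, and the model is fitted by simple gradient descent. It shows the direction and relative size of each factor's association with incident odds, but it does not report standard errors, so it cannot by itself establish statistical significance. A statistics package, for example the `Logit` model in statsmodels, would provide p-values.

```python
import math


def standardize(columns):
    """Rescale each feature column to mean 0 and standard deviation 1 so coefficients are comparable."""
    scaled = []
    for col in columns:
        mean = sum(col) / len(col)
        sd = math.sqrt(sum((x - mean) ** 2 for x in col) / len(col)) or 1.0  # guard against constant columns
        scaled.append([(x - mean) / sd for x in col])
    return scaled


def fit_logistic(features, outcome, lr=0.1, epochs=5000):
    """Fit P(incident) = sigmoid(b0 + sum_j b_j * x_j) by batch gradient descent on mean log-loss.

    `features` is a list of columns (one list per risk factor); `outcome` holds 0/1 labels.
    Returns [b0, b1, ..., bk].
    """
    n, k = len(outcome), len(features)
    weights = [0.0] * (k + 1)  # weights[0] is the intercept
    for _ in range(epochs):
        grad = [0.0] * (k + 1)
        for i in range(n):
            z = weights[0] + sum(weights[j + 1] * features[j][i] for j in range(k))
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of an incident
            err = p - outcome[i]
            grad[0] += err
            for j in range(k):
                grad[j + 1] += err * features[j][i]
        weights = [w - lr * g / n for w, g in zip(weights, grad)]
    return weights


# Hypothetical shift-level data: 1 = a recordable incident occurred on that shift
overtime_hours = [0, 2, 4, 6, 8, 10, 12, 14, 1, 3, 9, 13]
equipment_age = [3, 5, 2, 7, 4, 6, 3, 5, 6, 2, 7, 4]
incident = [0, 0, 0, 1, 0, 1, 1, 1, 0, 0, 0, 1]

X = standardize([overtime_hours, equipment_age])
weights = fit_logistic(X, incident)
for name, w in zip(["intercept", "overtime_hours", "equipment_age"], weights):
    # Positive coefficient: higher values of the factor go with higher incident odds
    # (per one standard deviation of the factor)
    print(f"{name}: {w:+.2f}")
```

Standardizing the inputs puts the coefficients on a common scale, which makes it easier to compare factors measured in different units (hours vs. years).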


OSHA Clarifies Position on Incentive Programs

OSHA has issued a memorandum clarifying the agency's position on workplace safety incentive programs and drug testing. OSHA's rule prohibiting employers from retaliating against employees for reporting work-related injuries or illnesses does not prohibit workplace safety incentive programs or post-incident drug testing. The Department believes that employers use safety incentive programs and/or post-incident drug testing to promote workplace safety and health. An action taken under a safety incentive program or post-incident drug testing policy would violate OSHA's anti-retaliation rule if the employer took the action to penalize an employee for reporting a work-related injury or illness, rather than for the legitimate purpose of promoting workplace safety and health. For more information, see the memorandum.

USTR Finalizes China 301 List 3 Tariffs

On Monday, September 17, 2018, the Office of the United States Trade Representative (USTR) released a list of approximately $200 billion worth of Chinese imports, including hundreds of chemicals, that will be subject to additional tariffs. The additional tariffs take effect September 24, 2018, at an initial rate of 10 percent. Starting January 1, 2019, the rate will increase to 25 percent.

The Administration also removed nearly 300 items from the final list but did not provide a specific list of the excluded products. Products removed from the proposed list include certain consumer electronics, such as smart watches and Bluetooth devices; certain chemical inputs for manufactured goods, textiles, and agriculture; certain health and safety products, such as bicycle helmets; and child safety furniture, such as car seats and playpens.

Individual companies may want to review the list to determine the status of Harmonized Tariff Schedule (HTS) codes of interest.
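The rate schedule above can be turned into a quick estimate of the additional duty on a given entry. This sketch is illustrative only: it treats the additional tariff as a percentage of the entry's customs value, hard-codes the two rates and dates announced on September 17, 2018, and uses a hypothetical $50,000 shipment. It does not check whether a product's HTS code is actually on the list.

```python
from datetime import date
from decimal import Decimal, ROUND_HALF_UP

# Schedule for List 3 goods as announced in September 2018;
# any later changes to the schedule are not modeled here.
SCHEDULE = [
    (date(2018, 9, 24), Decimal("0.10")),
    (date(2019, 1, 1), Decimal("0.25")),
]


def additional_duty(customs_value: Decimal, entry_date: date) -> Decimal:
    """Additional tariff owed on a listed product, rounded to the cent."""
    rate = Decimal("0")  # no additional tariff before the first effective date
    for effective, scheduled_rate in SCHEDULE:
        if entry_date >= effective:
            rate = scheduled_rate
    return (customs_value * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


value = Decimal("50000.00")  # hypothetical shipment of a listed chemical
print(additional_duty(value, date(2018, 10, 15)))  # 5000.00
print(additional_duty(value, date(2019, 2, 1)))    # 12500.00
```

`Decimal` is used instead of floating point so that currency amounts round predictably.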

NACD Responsible Distribution Cybersecurity Webinar

Join the National Association of Chemical Distributors (NACD) and Kestrel Principal Evan Fitzgerald for a free webinar on Responsible Distribution Code XIII. We will take a deeper dive into Code XIII.D, which focuses on cybersecurity and information. Learn ways to protect your company from this constantly evolving threat.

NACD Responsible Distribution Webinar

Code XIII & Cybersecurity Breaches

Thursday, September 20, 2018

12:00-1:00 p.m. (EDT)